We’ve spent the last eight years in HR and talent acquisition, mostly working with tech startups and mid-sized companies (50–500 employees). We started as recruiters, moved into TA management, and now oversee hiring operations across SaaS, fintech, and e-commerce teams.

Over the years, we’ve personally screened thousands of resumes, both by hand and with software. About 18 months ago, when the number of applications began to climb, we implemented CVShelf at our current company.

We’ve also worked extensively with enterprise ATS platforms, such as Greenhouse, Lever, and Workable, and experimented with resume parsing tools like Skillate and HireVue.

This post is for HR professionals and recruiters who want to streamline their workflows using resume screeners and gain an honest, real-world understanding of what these tools truly do.

We’ll explain what happens behind the scenes: no marketing fluff, no exaggerations, just a candid look at how resume screeners work when configured by professionals like us.

Why Resume Screeners Exist (And Why Manual Screening Doesn’t Work)

At our company, we usually receive 300–500 applications for every position. Even if you have a strong employer brand and clear job descriptions, most applicants just aren’t qualified.

Before we had screening tools, this meant:

- Recruiters spending hours screening out unqualified resumes

- Strong candidates waiting longer to receive responses

- Hiring managers reviewing inconsistent shortlists

Resume screeners were developed to address one major problem: scale. They don’t replace recruiters; they save us from drowning in the volume of applications.

What Resume Screeners Actually Do

Let’s clear up the biggest misconception right away.

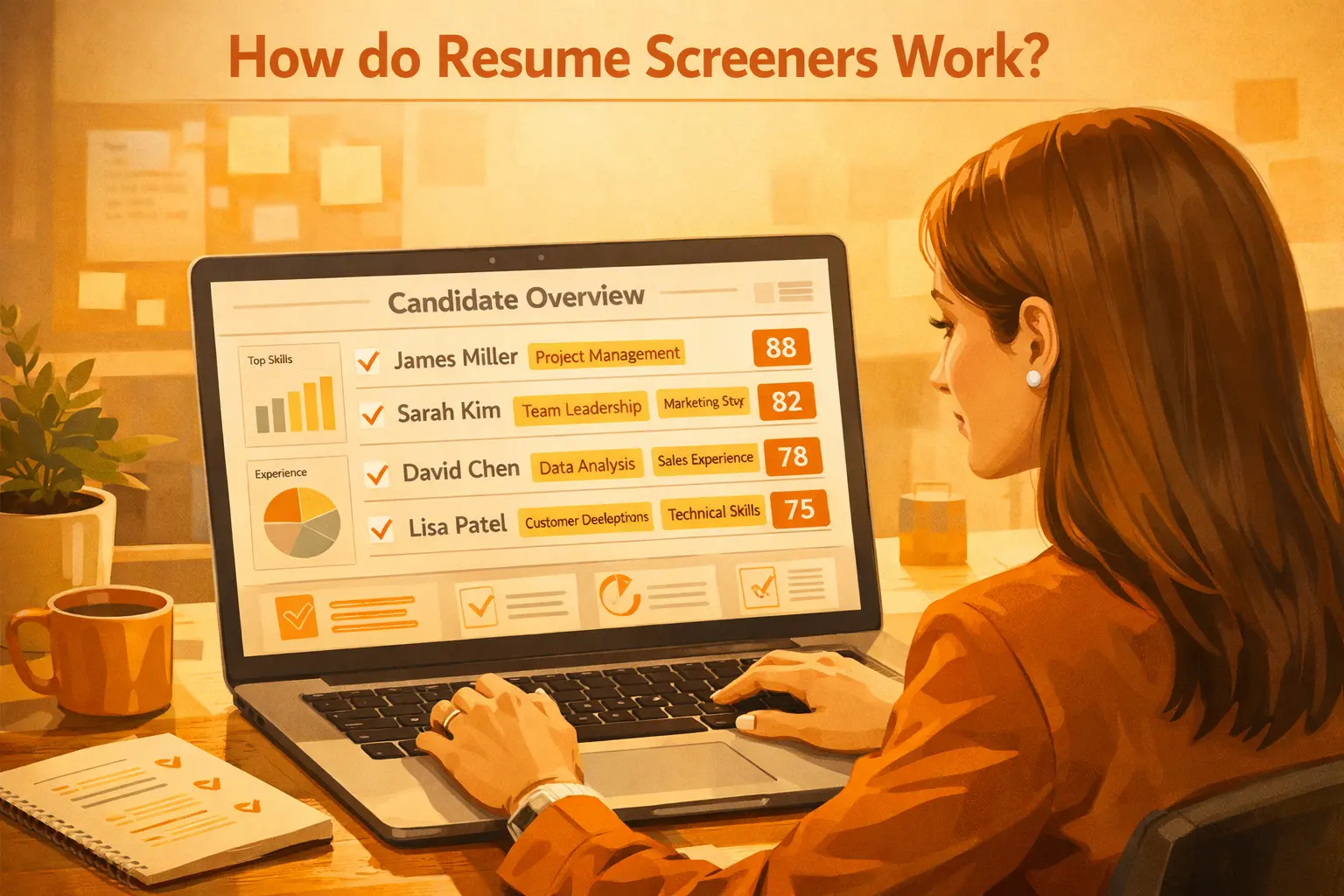

Most modern resume screeners do not automatically reject candidates. Instead, they rank and score them. Here’s what happens in a tool like CVShelf:

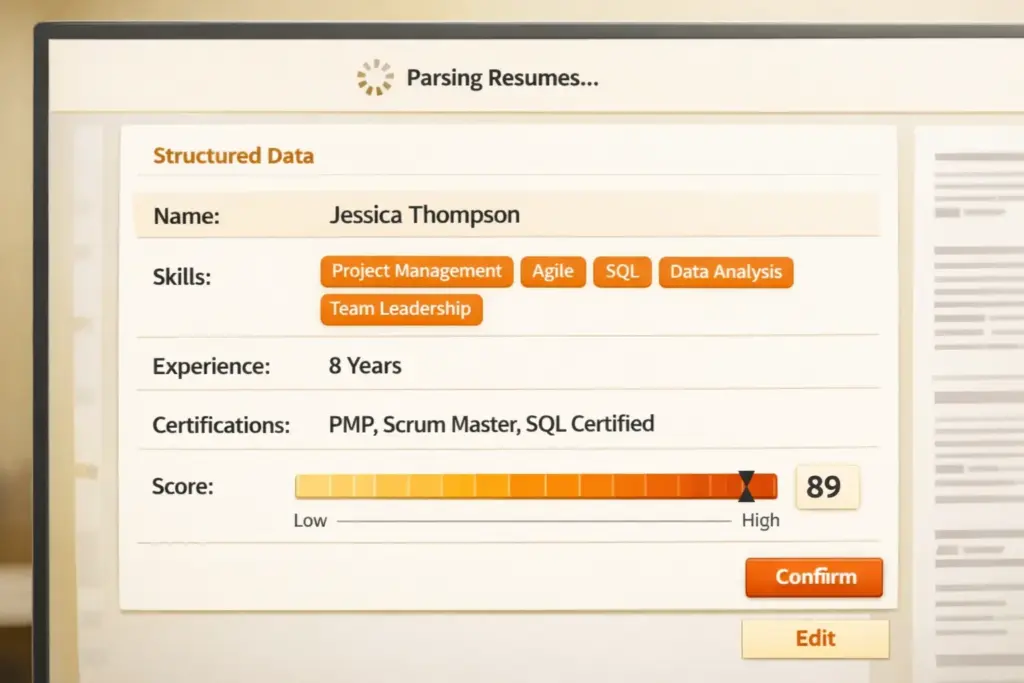

- Resumes are parsed into structured data.

- The system checks for criteria we define.

- Each resume receives a score (e.g., 0–100).

- Candidates are ranked from highest to lowest.

That’s it. No judgment, no intuition, no “understanding” of your career story. The real filtering happens later, when humans review only the top 20–50 candidates and ignore the rest.

That’s the uncomfortable truth most people miss.
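
To make the parse, check, score, rank flow concrete, here is a minimal sketch in Python. It is not CVShelf’s code: the `Candidate` class, the `CRITERIA` keywords and points, and the `review_limit` cutoff are all assumptions we made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    resume_text: str


# Hypothetical criteria (keyword -> points); not a real CVShelf configuration.
CRITERIA = {"python": 40, "sql": 30, "aws": 30}


def score(resume_text: str) -> int:
    """Sum the points for every configured keyword found in the text (0-100)."""
    words = set(resume_text.lower().replace(",", " ").split())
    return sum(points for keyword, points in CRITERIA.items() if keyword in words)


def rank(candidates: list[Candidate]) -> list[tuple[Candidate, int]]:
    """Score everyone and sort from highest to lowest. Nobody is rejected."""
    scored = [(c, score(c.resume_text)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


applicants = [
    Candidate("A", "Built dashboards in Excel"),
    Candidate("B", "Python, SQL, AWS data pipelines"),
    Candidate("C", "Python scripting and SQL reporting"),
]
ranking = rank(applicants)

# The human cutoff, not the software, is what makes low scorers invisible.
review_limit = 2
shortlist = [c.name for c, _ in ranking[:review_limit]]
print(shortlist)  # ['B', 'C']
```

Candidate A is still in `ranking` with a score of 0. They were never rejected; they simply fall below the point where anyone looks.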

Resume Screeners Are Only as Smart as the Humans Behind Them

Here’s the part most recruiters don’t openly discuss: A poorly configured screener can be worse than manually screening resumes. It can systematically eliminate good candidates at scale.

When we configure CVShelf, we decide:

- Which skills are required vs. optional

- How many years of experience matter

- Whether education is a hard filter

- How much weight to give keywords, numbers, or certifications

The system isn’t making decisions. It’s executing our assumptions about what leads to success in a given role. This is why two companies using the same tool can have completely different outcomes. You just need to find which AI recruitment tool works best for you.
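
A rough way to picture this is to treat the configuration as data. The sketch below is hypothetical (the `ScreenerConfig` fields, the 70/30 weights, and the scoring formula are our own assumptions, not CVShelf’s schema), but it shows how the same two profiles rank differently under two configurations.

```python
from dataclasses import dataclass, field


@dataclass
class ScreenerConfig:
    required_skills: set[str]
    optional_skills: set[str] = field(default_factory=set)
    min_years: int = 0
    degree_is_hard_filter: bool = False
    required_weight: int = 70  # share of the score from required skills
    optional_weight: int = 30  # share of the score from optional skills


@dataclass
class Profile:
    name: str
    skills: set[str]
    years: int
    has_degree: bool


def coverage(have: set[str], wanted: set[str]) -> float:
    """Fraction of the wanted skills that the profile lists."""
    return len(have & wanted) / len(wanted) if wanted else 0.0


def score_profile(p: Profile, cfg: ScreenerConfig) -> int:
    # In this sketch a failed hard filter drops the score to zero.
    if cfg.degree_is_hard_filter and not p.has_degree:
        return 0
    if p.years < cfg.min_years:
        return 0
    required = coverage(p.skills, cfg.required_skills) * cfg.required_weight
    optional = coverage(p.skills, cfg.optional_skills) * cfg.optional_weight
    return round(required + optional)


skills = {"react", "node.js"}
company_a = ScreenerConfig(skills, {"aws"}, min_years=3)
company_b = ScreenerConfig(skills, {"aws"}, min_years=3, degree_is_hard_filter=True)

self_taught = Profile("self-taught", {"react", "node.js", "aws"}, 5, False)
graduate = Profile("graduate", {"react"}, 3, True)

for cfg in (company_a, company_b):
    print(score_profile(self_taught, cfg), score_profile(graduate, cfg))
# 100 35
# 0 35
```

One boolean flipped, and the strongest profile at one company becomes the weakest at the other.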

3 Myths About Resume Screeners (From People Who Use Them)

Let’s bust a few myths about resume screeners:

Myth #1: “ATS systems automatically reject resumes with formatting issues or missing keywords”

Reality: Most tools don’t reject anything. A resume with imperfect formatting or missing keywords still enters the system; it just scores lower. The real issue isn’t rejection; it’s invisibility. If recruiters only look at the top 20 ranked candidates, being ranked #87 is the same as being rejected.

Myth #2: “Resume screeners use AI that understands experience”

Reality: They don’t. Despite the marketing buzz, most screening systems are still based on keyword matching and rules-based scoring. CVShelf uses some machine learning for parsing resumes, but the criteria are still straightforward:

- “Does this resume mention Python?”

- “Does this resume include 5+ years of experience with this skill?”

- “Does it list the required certifications?”

The system doesn’t read between the lines, infer potential, or make guesses.
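
Checks like the three above can be approximated with a few regular expressions. This is a simplified sketch under our own assumptions, not how any particular vendor implements it. In particular, `years_of_experience` takes the largest “N years” figure anywhere in the text rather than tying it to a specific skill.

```python
import re


def mentions(text: str, term: str) -> bool:
    """True if the term appears as a whole word, ignoring case."""
    pattern = rf"(?<!\w){re.escape(term)}(?!\w)"
    return re.search(pattern, text, re.IGNORECASE) is not None


def years_of_experience(text: str) -> int:
    """Largest 'N years' or 'N+ years' figure stated in the text, or 0."""
    found = re.findall(r"(\d+)\+?\s*years?", text, re.IGNORECASE)
    return max((int(n) for n in found), default=0)


def has_certifications(text: str, required: list[str]) -> bool:
    """True only if every required certification is named in the text."""
    return all(mentions(text, cert) for cert in required)


clear = "5 years software engineer. Python, React, AWS Certified Developer."
vague = "Seasoned engineer with many years of scripting experience."

print(mentions(clear, "Python"), years_of_experience(clear))  # True 5
print(mentions(vague, "Python"), years_of_experience(vague))  # False 0
```

The vague resume may describe the same person, but nothing in it matches a rule, so it earns no credit.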

Myth #3: “Candidates need to beat the ATS with tricks”

Reality: The screener isn’t an enemy. It’s doing exactly what we programmed it to do. If a candidate is qualified and communicates that clearly, they’ll score well. If their resume is vague or abstract, the system can’t give them credit, even if a human would understand it later.

Real Case Studies from Our Hiring Pipeline

Here are some real examples of what happened in our pipeline:

Case Study 1: The “Bad” Resume That Passed

We once advanced a candidate with a poorly formatted resume:

- Two dense pages

- No bullet points

- Typos

- Inconsistent formatting

But the content was perfect:

- React, Node.js, PostgreSQL, AWS

- “5 years software engineer” clearly stated

- Relevant tools mentioned multiple times

CVShelf gave them a score of 82/100. They aced the interview and got hired. The resume looked bad to humans, but the content was ideal for screening.

One thing we like about CVShelf is the transparency; we can see why someone scored high or low and adjust the logic when it doesn’t align with reality. Our Resume Screener is the best there is.

Case Study 2: The “Good” Resume That Failed

On the flip side, we rejected a beautifully designed resume from a candidate with impressive company names on it. The issue? The language. They used titles and phrases like:

- “Growth Ninja”

- “Customer Happiness Hero”

- “Drove engagement”

- “Fostered community”

Our screener was looking for:

- “Customer Success Manager”

- “CRM experience”

- “B2B SaaS”

CVShelf scored them 34/100. They could have been a strong fit, but the system couldn’t match the vague language to the concrete requirements.

Case Study 3: One Line, +30 Points

We coached a friend applying to our company. Here’s how a little more clarity made a huge difference:

Original line:

- “Managed social media campaigns”

Rewritten as:

- “Managed paid social media campaigns (Facebook Ads, LinkedIn Ads) with $50K monthly budget, achieving 2.3% CTR”

Their score jumped from 41 to 71: same experience, just clearer language.

Patterns You Only See at Scale

After reviewing hundreds of resumes per role, we’ve noticed a few patterns:

- Quantified achievements score higher: “Increased revenue” scores lower than “Increased revenue by 34%.”

- Location data can filter people out unintentionally: Even for remote roles, the system sometimes flags candidates living too far from our offices.

- About 30% of applicants don’t meet minimum requirements: Despite clear job requirements (like “3+ years of experience”), many applicants apply anyway. Screeners save us a ton of time here.
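
The first pattern is easy to see if you imagine a rule that rewards bullets containing a figure. The sketch below is our guess at the kind of logic involved, not CVShelf’s actual scoring; the `METRIC` pattern, the 5 points per bullet, and the cap of 20 are invented for illustration.

```python
import re

# Matches figures such as "34%", "$50K", "2.3%", or "1,200".
# The lookbehind skips digits glued to letters, so "B2B" does not count.
METRIC = re.compile(r"(?<![A-Za-z])\$?\d+(?:[.,]\d+)*\s?(?:%|[KkMm]\b)?")


def quantified_bonus(bullets: list[str], points_per_bullet: int = 5, cap: int = 20) -> int:
    """Award points for each bullet that contains at least one figure, up to a cap."""
    hits = sum(1 for bullet in bullets if METRIC.search(bullet))
    return min(hits * points_per_bullet, cap)


print(quantified_bonus(["Increased revenue"]))         # 0
print(quantified_bonus(["Increased revenue by 34%"]))  # 5
```

Under a rule like this, the two revenue bullets describe the same achievement but only one of them earns points.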

Where Automation Ends, and Humans Take Over

Here’s the breakdown of where automation stops and humans take over with CVShelf:

Automated:

- Resume parsing

- Keyword matching

- Scoring and ranking

- Filtering for must-have requirements

Human:

- Reviewing the top 30–50 candidates

- Evaluating career progression

- Noticing red flags (job hopping, unexplained gaps)

- Making final decisions on who to interview

A common surprise: Most applicants assume humans read cover letters early. We don’t. Typically, we don’t even see a cover letter unless the candidate has scored well enough to unlock their profile.

Bias: Resume Screeners Reduce It and Amplify It

Resume screeners reflect the biases we encode into them. CVShelf allows us to hide names, photos, and addresses to reduce demographic bias, but bias can still creep in through criteria like:

- “Top 20 university preferred” → socioeconomic bias

- “10+ years of experience” → age bias

- “Fortune 500 background” → bias against startup talent
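
Here is a small sketch of why hiding fields is only half the job. The field names and the `redact` helper are hypothetical, not CVShelf’s data model.

```python
# Fields hidden from reviewers in this sketch (assumed names).
HIDDEN_FIELDS = {"name", "photo_url", "address"}


def redact(profile: dict) -> dict:
    """Return a copy of the profile without the hidden demographic fields."""
    return {key: value for key, value in profile.items() if key not in HIDDEN_FIELDS}


profile = {
    "name": "Jordan Example",
    "photo_url": "photo.png",
    "address": "12 Sample Street",
    "university": "Example State University",
    "years_experience": 6,
}

blind = redact(profile)
print(sorted(blind))  # ['university', 'years_experience']
```

The name and address are gone, but `university` and `years_experience` are still there for a biased criterion to act on.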

A Real Example of Bias Amplification:

We initially gave higher scores to candidates with “leadership experience.” Men were more likely to describe themselves as “leading” projects even as individual contributors.

Women more often used words like “collaborated” or “contributed.” This resulted in a gender skew in scores. To fix this, we updated the scoring logic to value collaboration and leadership equally.
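
In code terms, the fix amounts to widening the set of words that earn the same credit. The word lists and the 10-point value below are illustrative assumptions, not our production configuration.

```python
import re

# Hypothetical term groups; the real lists would be longer.
LEADERSHIP = {"led", "leading", "managed", "directed"}
COLLABORATION = {"collaborated", "contributed", "partnered", "supported"}


def initiative_points(text: str, groups: list[set[str]], points: int = 10) -> int:
    """Award the same points if any term from any accepted group appears."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return points if any(words & group for group in groups) else 0


before = [LEADERSHIP]                 # original logic: leadership words only
after = [LEADERSHIP, COLLABORATION]   # updated logic: both valued equally

resume_a = "Led the checkout redesign"
resume_b = "Collaborated on the checkout redesign"

print(initiative_points(resume_a, before), initiative_points(resume_b, before))  # 10 0
print(initiative_points(resume_a, after), initiative_points(resume_b, after))    # 10 10
```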

What This Means for HR and Recruiting Teams

If you’re using or considering a resume screener, the biggest takeaway is about ownership. You can’t just blame the tool for bad outcomes.

Resume screeners:

- Enforce our assumptions at scale

- Make our hiring criteria explicit (whether we like it or not)

- Expose gaps between what we say we want and what we actually reward

This can be uncomfortable, but it’s also powerful. When screening goes wrong, it’s rarely because the software is “too strict”. More often, it’s because:

- Requirements weren’t truly job-critical

- Language favored one group’s self-presentation over another’s

- Filters were carried over from outdated job descriptions

- No one reviewed why strong candidates were scoring low

A screener doesn’t absolve us of responsibility; it forces accountability.

How We Think About “Good” Screening Configuration

Here’s the mindset we’ve developed over years of trial and error:

- If we wouldn’t confidently defend a requirement to a hiring manager, it shouldn’t be a hard filter.

- If a criterion correlates more with privilege than performance, it needs scrutiny.

- If the screener surprises us, it’s a signal to review the logic, not override the result.

- If many good candidates score low for the same reason, the system is telling us something important.

The best screeners don’t eliminate thinking; they show us where thinking is needed.
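
The last check in that list can be run as a simple audit, assuming your tool lets you export each candidate’s score along with the criteria they missed. The record layout here (`score`, `human_rating`, `missing`) and the threshold of 50 are hypothetical.

```python
from collections import Counter


def common_misses(results: list[dict], threshold: int = 50) -> list[tuple[str, int]]:
    """Count the criteria most often missing among low scorers a human rated as strong."""
    misses = Counter()
    for record in results:
        if record["human_rating"] == "strong" and record["score"] < threshold:
            misses.update(record["missing"])
    return misses.most_common()


results = [
    {"score": 34, "human_rating": "strong", "missing": ["Customer Success Manager", "CRM experience"]},
    {"score": 41, "human_rating": "strong", "missing": ["Customer Success Manager"]},
    {"score": 82, "human_rating": "strong", "missing": []},
    {"score": 20, "human_rating": "weak", "missing": ["B2B SaaS"]},
]

print(common_misses(results))
# [('Customer Success Manager', 2), ('CRM experience', 1)]
```

If one criterion keeps showing up at the top of this list, that is the rule to revisit first.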

Final Thought

Resume screeners aren’t magic. They’re mirrors. They reflect:

- How clearly we’ve defined success

- How intentional our hiring criteria really are

- Whether our process values signal over polish

Used thoughtfully, they save time, reduce noise, and create consistency. Used carelessly, they amplify blind spots. Either way, they’re doing exactly what we told them to do, and that’s the part worth paying attention to.